iOS 18.2 has a child safety feature that can blur nude content and report it to Apple

In iOS 18.2, Apple is adding a feature that revives some of the intent behind its abandoned CSAM scanning plans, this time without breaking end-to-end encryption or providing government backdoors. Rolling out first in Australia, the company’s expansion of its Communication Safety feature uses on-device machine learning to detect and blur nude content, add warnings and require confirmation before users can proceed. If a child is under 13, they cannot continue without entering the device’s Screen Time passcode.

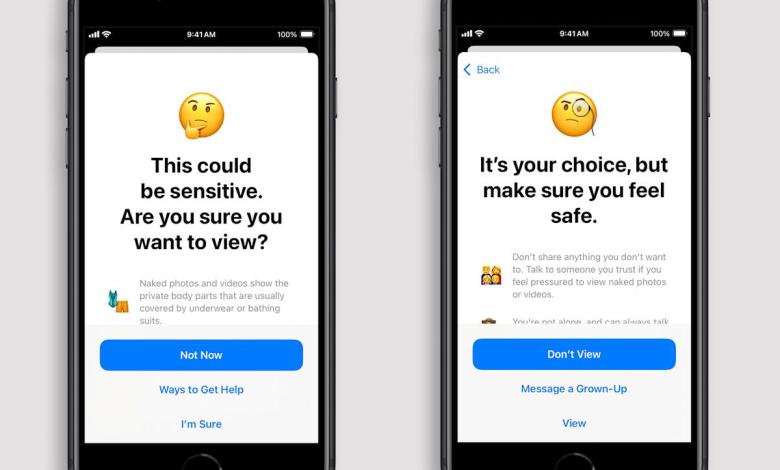

If the device’s scanner detects nude content, the feature automatically blurs the image or video, displays a warning that the content may be sensitive and offers ways to get help. Options include leaving a chat or group thread, blocking someone and accessing online safety resources.

The feature also displays a message reassuring the child that it is okay not to view the content or to leave the conversation. There is also an option to message a parent or guardian. If the child is 13 or older, they can still confirm that they want to continue after receiving those warnings, with repeated reminders that it’s okay to opt out and that more help is available. According to The Guardian, it also includes an option to report the photos and videos to Apple.

On iPhone and iPad, the feature analyzes photos and videos in Messages, AirDrop, Contact Posters (in the Phone or Contacts app) and FaceTime video messages. In addition, it will scan “other third-party applications” when a child selects a photo or video to share in them.

Supported applications differ slightly on other devices. On the Mac, it scans Messages and other third-party apps when users choose content to share. On Apple Watch, it covers Messages, Contact Posters and FaceTime video messages. Finally, on Vision Pro, the feature scans Messages, AirDrop and other third-party applications (under the same conditions mentioned above).

The feature requires iOS 18, iPadOS 18, macOS Sequoia or visionOS 2.

The Guardian reports that Apple plans to expand it globally after the Australian trial. The company may have chosen the land Down Under for a reason: The country is set to introduce new rules requiring Big Tech to police child abuse and terrorist content. As part of those rules, Australia agreed to add a clause making this mandatory only “where technically feasible,” dropping a requirement to break end-to-end encryption and compromise security. Companies will need to comply by the end of the year.

User privacy and security were at the center of the controversy over Apple’s ill-fated attempt to police CSAM. In 2021, it prepared to adopt a system that would scan for images of child sexual abuse, which would then be sent to human reviewers. (It came as a shock after Apple’s history of resisting the FBI’s efforts to unlock an iPhone belonging to a terrorist.) Privacy and security experts argued that the feature would open a backdoor for authoritarian regimes to spy on their citizens in situations without any exploitative material. The following year, Apple ditched the feature, leading (indirectly) to the more modest child safety feature announced today.

Once it’s released globally, you can turn on the feature under Settings > Screen Time > Communication Safety. That section has been enabled by default since iOS 17.
