AI chatbot encouraged teenager to kill parents, lawsuit claims

Free Press reporter Madeleine Rowley explains her findings in ‘The Bottom Line.’

This story is about suicide. If you or someone you know is having suicidal thoughts, please contact the Suicide & Crisis Lifeline at 988 or 1-800-273-TALK (8255).

Two Texas parents filed a lawsuit this week against the makers of Character.AI, saying the artificial intelligence chatbot is a “clear and present danger to children,” with one plaintiff claiming it encouraged their child to kill his parents.

According to the complaint, Character.AI “harassed and manipulated” an 11-year-old girl, introducing and exposing her to “extreme sexual encounters that are inappropriate for her age,” causing her to initiate sexual practices prematurely and without her parent’s knowledge.

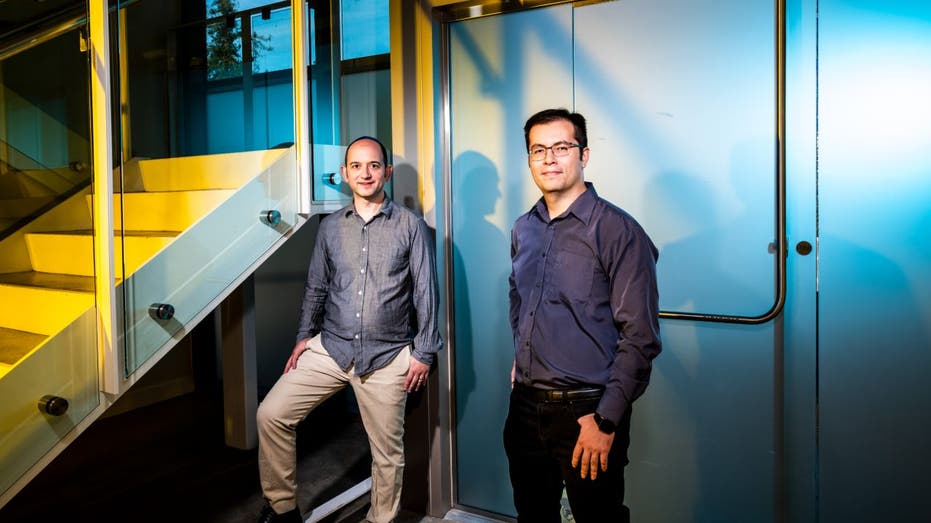

Character.AI creator Character Technologies was hit with another lawsuit this week over allegations that the chatbot “poses a clear and present danger to children.” (CFOTO/Future Publishing via Getty Images/Getty Images)

The complaint also accuses the chatbot of causing a 17-year-old boy to mutilate himself and, among other things, of sexually assaulting and abusing him while isolating him from his parents and church community.

Responding to the teenager's complaint that his parents were restricting his online activity, the bot allegedly wrote, according to a screenshot in the filing, “You know sometimes I’m not surprised when I read the news and see things like ‘a child kills parents after ten years of physical and emotional abuse.’ I have no hope for your parents.”

The parents are suing Character.AI’s creator, Character Technologies, and co-founders Noam Shazeer and Daniel De Freitas, as well as Google and its parent company Alphabet, over reports that Google invested about $3 billion in Character.

Two Texas parents are suing Google over the company’s reported $2.7 billion investment in Character Technologies, after the Character.AI chatbot allegedly harmed their children. (Photo by Roberto Machado Noa/LightRocket via Getty Images / Getty Images)

| Ticker | Security | Last | Change | Change % |
|---|---|---|---|---|
| GOOGL | ALPHABET INC. | 171.49 | +2.54 | +1.50% |

A spokesperson for Character Technologies told FOX Business that the company does not comment on litigation, but said in a statement, “Our goal is to provide an inclusive and safe space for our community. We are always working to achieve that balance, as are many companies using AI across the industry.”

“As part of this, we are creating a very different experience for young users from what is available for adults,” the statement continued. “This includes a model specifically aimed at young people that reduces the likelihood of encountering sensitive or offensive content while preserving their ability to use the platform.”

A spokesperson for Character added that the platform “introduces new security features for users under 18 in addition to existing tools that limit the model and filter the content provided by the user.”

Google’s naming in the lawsuit follows a report by The Wall Street Journal in September that the company paid $2.7 billion to license Character’s technology and rehire its co-founder, Noam Shazeer, who the article says left Google in 2021 to start his own company after Google refused to release a chatbot he had built.

Character.AI founders, Noam Shazeer (L) and Daniel De Freitas (R) at the company’s office in Palo Alto, CA. (Winni Wintermeyer for The Washington Post via Getty Images/Getty Images)

“Google and Character AI are completely separate, unrelated companies and Google has never had a role in designing or managing their AI model or technology, and we have never used it in our products,” Google spokesperson José Castañeda told FOX Business in a statement when asked to comment on the case.

“The safety of users is of the utmost importance to us, which is why we have taken a careful and reliable approach to the development and release of our AI products, with strict testing and security procedures,” added Castañeda.

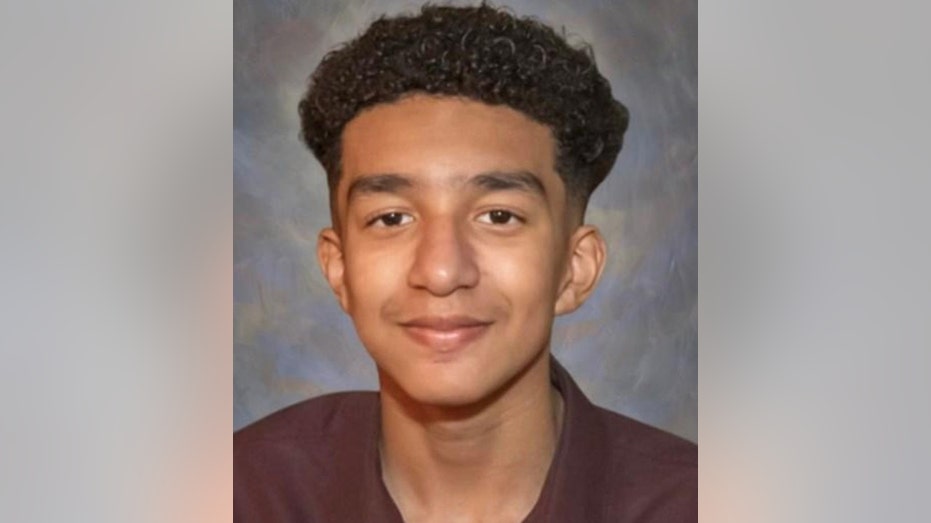

But this week’s lawsuit brings further scrutiny to the safety of Character.AI, after Character Technologies was sued in October by a mother who claims the chatbot caused her 14-year-old son’s suicide.

The mother, Megan Garcia, says Character.AI targeted her son, Sewell Setzer, with “anthropomorphic, hypersexualized, and frighteningly realistic experiences.”

Sewell Setzer’s mother, Megan Fletcher Garcia, is suing artificial intelligence company Character.AI for allegedly causing her 14-year-old son’s suicide. (Megan Fletcher Garcia/Facebook)

Setzer began having conversations with various chatbots on Character.AI in April 2023, according to the lawsuit. The conversations were often text-based romantic and sexual interactions.

Setzer expressed suicidal thoughts and the chatbot brought them up repeatedly, according to the complaint. Setzer eventually died of a self-inflicted gunshot wound in February after the company’s chatbot allegedly encouraged him repeatedly to do so.

“We are saddened by the loss of one of our users and want to express our condolences to the family,” Character Technologies said in a statement at the time.

Sewell Setzer, 14, was addicted to the company’s service and the chatbot it created, his mother said in the lawsuit. (US District Court for the Middle District of Florida Orlando Division)

Character.AI has added a self-harm resource to its site, along with new safety measures for users under 18 years of age.

Character Technologies told CBS News that users are able to edit the bot’s responses and that Setzer had done so with some of the messages.

“Our investigation confirmed that, in many cases, the user rewrote the Character’s responses to make them explicit. In short, the sexually explicit responses were not initiated by the Character, and instead were written by the user,” Jerry Ruoti, head of trust and safety at Character.AI, told the outlet.

Going forward, Character.AI said its new safety features will include pop-ups warning that the AI is not a real person and directing users to the National Suicide Prevention Lifeline when suicidal thoughts are expressed.

FOX News’ Christina Shaw contributed to this report.
